Beyond Chain-of-Thought: The Evolution of AI Problem-Solving with Least-to-Most Prompting

Least-to-most prompting outperforms chain-of-thought in complex problem-solving.

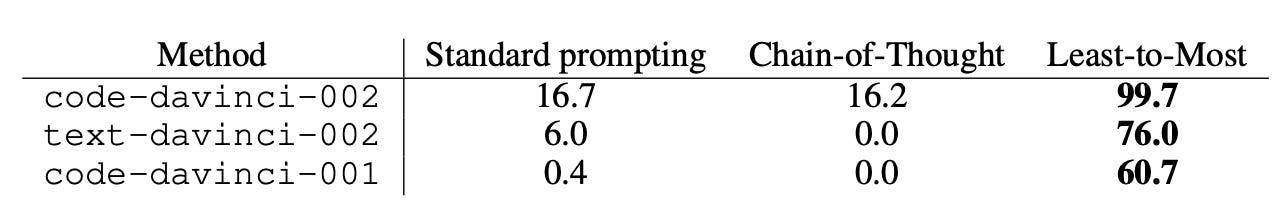

TL;DR: Least-to-most prompting, applied to the GPT-3 code-davinci-002 model, outperforms chain-of-thought prompting on complex problems. The technique first decomposes a problem into simpler subproblems and then solves them in order, achieving 99% accuracy on the SCAN benchmark. This innovative approach marks a leap toward deep learning systems that reason more like humans, bridging a significant gap in AI problem-solving.

In the evolving world of AI prompting, solving complex problems has been a perennial challenge. Chain-of-thought prompting, while effective on many reasoning tasks, often falters when a problem is harder than the examples shown in the prompt. This limitation sets the stage for a groundbreaking solution: least-to-most prompting.

Bridging the Human-AI Gap

Deep learning and human intelligence have long differed in one key respect: humans can learn and reason from just a few examples, a feat AI has struggled to replicate. Chain-of-thought prompting made strides in this direction, improving both interpretability and performance, yet it still stumbled when generalizing to problems harder than its examples. This is where least-to-most prompting shines, aligning more closely with how humans tackle difficult problems.

The Rise of Least-to-Most Prompting

Designed to tackle the challenge of easy-to-hard generalization, least-to-most prompting is a novel strategy that breaks a complex problem into simpler subproblems. These subproblems are then solved in sequence, each one using the answers to the ones before it, with no extra training or fine-tuning. The name is borrowed from a teaching technique in educational psychology, in which learners are guided through a progressive sequence of prompts that builds from simpler skills to harder ones.

Methodology and Execution

The process involves two stages: decomposition and subproblem solving. First, the model is prompted to break the problem down into a sequence of simpler subproblems. Then each subproblem is solved in turn, using a prompt that combines fixed few-shot examples with the previously answered subquestions and their answers. Answering the last subproblem resolves the original problem, an elegant demonstration of sequential reasoning.
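The two stages can be sketched in Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the `LanguageModel` type, the prompt wording, the one-subquestion-per-line decomposition format, and `scripted_model` (a canned stand-in for a real LLM call) are all hypothetical. The key point it shows is that every solving prompt carries the earlier question/answer pairs forward.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: any function mapping a prompt string to a completion string,
# e.g. a thin wrapper around an LLM API. This is not a real library type.
LanguageModel = Callable[[str], str]


def build_decomposition_prompt(exemplars: str, problem: str) -> str:
    """Stage 1 prompt: fixed decomposition exemplars followed by the new problem."""
    return f"{exemplars}\n\nQ: {problem}\nA: To solve this, we first need to answer:"


def build_solving_prompt(
    exemplars: str, context: str, solved: List[Tuple[str, str]], subquestion: str
) -> str:
    """Stage 2 prompt: fixed exemplars, the problem statement, earlier Q/A pairs, then the next subquestion."""
    parts = [exemplars, "", context]
    for question, answer in solved:
        parts.append(f"Q: {question}\nA: {answer}")
    parts.append(f"Q: {subquestion}\nA:")
    return "\n".join(parts)


def least_to_most(
    model: LanguageModel,
    decomposition_exemplars: str,
    solving_exemplars: str,
    context: str,
    question: str,
) -> Tuple[str, List[Tuple[str, str]]]:
    # Stage 1: ask for simpler subquestions (assumed output format: one per line).
    raw = model(build_decomposition_prompt(decomposition_exemplars, f"{context} {question}"))
    subquestions = [line.strip() for line in raw.splitlines() if line.strip()]
    # The original question is always answered last.
    if not subquestions or subquestions[-1] != question:
        subquestions.append(question)

    # Stage 2: solve subquestions in order, feeding every earlier answer forward.
    solved: List[Tuple[str, str]] = []
    for subquestion in subquestions:
        prompt = build_solving_prompt(solving_exemplars, context, solved, subquestion)
        solved.append((subquestion, model(prompt).strip()))
    return solved[-1][1], solved


def scripted_model(prompt: str) -> str:
    """Toy stand-in for an LLM that returns canned completions (demonstration only)."""
    if prompt.endswith("we first need to answer:"):
        return "How long does each trip take?"
    last_question = prompt.rsplit("Q: ", 1)[-1]
    if last_question.startswith("How long does each trip take?"):
        return "It takes 4 + 1 = 5 minutes per trip."
    # The final answer is only available once the earlier answer is in the prompt.
    if last_question.startswith("How many times can she slide") and "5 minutes per trip" in prompt:
        return "Each trip takes 5 minutes, so she can slide 15 / 5 = 3 times."
    return "I don't know."


context = ("Amy takes 4 minutes to climb to the top of a slide. "
           "It takes her 1 minute to slide down. The slide closes in 15 minutes.")
answer, trace = least_to_most(scripted_model, "<decomposition exemplars>",
                              "<solving exemplars>", context,
                              "How many times can she slide before it closes?")
print(answer)  # prints: Each trip takes 5 minutes, so she can slide 15 / 5 = 3 times.
```

In a real setup, `scripted_model` would be replaced by a call to an actual model, and the two exemplar strings by hand-written few-shot demonstrations for each stage.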

Experimental Results and Comparative Analysis

In practice, least-to-most prompting has shown remarkable results. Applied to the GPT-3 code-davinci-002 model, it reached 99% accuracy on the SCAN benchmark, far ahead of chain-of-thought prompting's 16%. This was achieved with just 14 exemplars, highlighting the method's efficiency and effectiveness.

Advantages and Limitations

This strategy not only excels on longer and more intricate problems but can also be combined with other prompting techniques, such as chain-of-thought and self-consistency. However, it has its limits. Its accuracy can be marred by small errors such as concatenation mistakes, and decomposition prompts do not transfer well across domains: a prompt that teaches the model to decompose one kind of problem may help little on another, so each new domain may need its own decomposition examples.
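As one illustration of combining methods, assume least-to-most prompting is paired with self-consistency, which samples several independent solutions and keeps the most common final answer. The sketch below shows only the voting step. `sample_solution` stands for one sampled least-to-most run and is hypothetical, as is the rule that the last integer in a response is its final answer; the sample responses are made up for the demo.

```python
import re
from collections import Counter
from typing import Callable, Optional


def extract_final_number(text: str) -> Optional[str]:
    """Return the last integer in a free-text answer, or None (assumed answer format)."""
    numbers = re.findall(r"-?\d+", text)
    return numbers[-1] if numbers else None


def self_consistent_answer(
    sample_solution: Callable[[int], str], num_samples: int = 5
) -> Optional[str]:
    """Majority-vote the final answers of several sampled runs (hypothetical sampler)."""
    votes = Counter()
    for i in range(num_samples):
        answer = extract_final_number(sample_solution(i))
        if answer is not None:  # skip runs with no parseable answer
            votes[answer] += 1
    if not votes:
        return None
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first.
    return votes.most_common(1)[0][0]


# Made-up sampled responses standing in for five temperature > 0 runs.
samples = [
    "Each trip takes 5 minutes, so she can slide 15 / 5 = 3 times.",
    "Each trip takes 5 minutes, so she can slide 3 times.",
    "Each trip takes 1 minute, so she can slide 15 times.",
    "Each trip takes 5 minutes, so 15 / 5 = 3.",
    "I am not sure.",
]
print(self_consistent_answer(lambda i: samples[i], num_samples=len(samples)))  # prints: 3
```

Voting on extracted answers rather than full texts matters because sampled runs rarely phrase the same answer identically.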

Conclusion

Least-to-most prompting is not just a step forward in AI's problem-solving capabilities; it's a leap toward bridging the gap between machine learning and the nuanced reasoning of the human mind. While it's not the ultimate solution for teaching reasoning to AI, it marks a significant advance, nudging AI toward more efficient and human-like learning.

https://arxiv.org/abs/2205.10625

This is the only paper I could find about this. Are you going through relatively older papers and summarizing them, or did I miss a more recent paper?

Goodness, it's so crazy that this paper seems pretty old to me because its preprint was submitted ~1.5yr ago and it doesn't use GPT-4
