Teaching LLMs to Imitate You: Personalized Text Generation

LLMs can generate emails, tweets, fiction, etc. in your voice and style

TL;DR: The article introduces a multistage and multitask approach to train large language models for personalized text generation. Using five stages and the user's previous writings, the system can generate content in the user's unique style. This method shows significant improvement over previous approaches and has the potential for creating content tailored to specific audiences and needs.

Personalized text generation is one of the newer frontiers of LLM usage. Applications range from AI-assisted writing of tweets, news stories, scientific articles, and fiction to transformations of existing content, such as summarization or elaboration. Recent work in this area introduces a multistage, multitask framework that teaches large language models (LLMs) to generate personalized text, drawing on documents the user has previously written.

The Multistage and Multitask Framework

The key innovation in this approach is its multistage, multitask design. Personalized text generation is split into five stages: retrieval, ranking, summarization, synthesis, and generation. Each stage contributes to output that reflects the user's voice and style.

The Retrieval and Ranking Process

The retrieval and ranking stages form the foundation of personalized text generation. The system first formulates a query from the title and the opening sentence of the content the user is writing. It uses this query to retrieve relevant entries from a store of the user's previous writings, then ranks the retrieved entries by their relevance and importance to the current writing task.
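
The query, retrieve, and rank steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method from the research: the article does not say how relevance is scored, so the sketch swaps in cosine similarity over word counts, and every name in it (`formulate_query`, `retrieve_and_rank`, `STOPWORDS`, the sample documents) is hypothetical.

```python
import math
import re
from collections import Counter

# Assumption: a tiny hand-picked stopword list so common words do not count as overlap.
STOPWORDS = {"a", "an", "and", "for", "i", "in", "my", "of", "the", "to", "with"}


def tokenize(text):
    """Lowercase the text, split it into word tokens, and drop stopwords."""
    return [t for t in re.findall(r"[a-z0-9']+", text.lower()) if t not in STOPWORDS]


def formulate_query(title, first_sentence):
    """Build the query from the title and the opening sentence."""
    return f"{title} {first_sentence}"


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_and_rank(query, past_documents, top_k=3):
    """Return up to top_k past documents that overlap with the query, most similar first."""
    query_vec = Counter(tokenize(query))
    scored = []
    for doc in past_documents:
        score = cosine(query_vec, Counter(tokenize(doc)))
        if score > 0:  # retrieval: keep only documents sharing at least one term
            scored.append((score, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)  # ranking: highest score first
    return [doc for _, doc in scored[:top_k]]


past_documents = [
    "Notes from my weekend hike in the mountains and the gear I packed.",
    "A quick review of the espresso machine I bought last month.",
    "Why I always pack light for a mountain hike.",
]
query = formulate_query("Packing for a mountain hike", "Every hike starts with the gear list.")
ranked = retrieve_and_rank(query, past_documents)
```

Here the espresso review is dropped because it shares no terms with the query, and the two hiking entries are returned in order of similarity. A production system would likely use a stronger retriever and a learned ranker; the point is only the shape of the data flow.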

Summarization and Synthesis

Once the retrieved entries have been ranked, the summarization stage condenses them into a summary. This summary, along with a few synthesized key elements, is then passed to an LLM for the final stage: generating the new content. This multi-step process, which culminates in generation, forms the backbone of personalized content generation.
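
To make the hand-off to the LLM concrete, here is a rough sketch of how the summary and the synthesized key elements might be assembled into a single input. It rests on several assumptions: the summarizer is a placeholder that keeps the first sentence of each entry, "key elements" are approximated as the most frequent non-stopword terms, and the prompt layout and all function names are hypothetical. Only the instruction string comes from the article.

```python
import re
from collections import Counter

INSTRUCTION = "Finish the passage in the user voice."
# Assumption: a tiny hand-picked stopword list for the keyword placeholder.
STOPWORDS = {"a", "an", "and", "for", "i", "in", "it", "my", "of", "the", "to", "was"}


def summarize(ranked_entries, max_sentences=2):
    """Placeholder summarizer: keep the first sentence of each top-ranked entry."""
    first_sentences = [re.split(r"(?<=[.!?])\s+", entry.strip())[0] for entry in ranked_entries]
    return " ".join(first_sentences[:max_sentences])


def synthesize_key_elements(ranked_entries, top_n=3):
    """Placeholder synthesis: the most frequent non-stopword terms across the entries."""
    words = []
    for entry in ranked_entries:
        words.extend(w for w in re.findall(r"[a-z']+", entry.lower()) if w not in STOPWORDS)
    return [word for word, _ in Counter(words).most_common(top_n)]


def build_generation_input(title, first_sentence, ranked_entries):
    """Combine instruction, summary, key elements, and the passage start into one prompt."""
    summary = summarize(ranked_entries)
    key_elements = synthesize_key_elements(ranked_entries)
    return "\n".join([
        INSTRUCTION,
        f"Summary of past writing: {summary}",
        f"Key elements: {', '.join(key_elements)}",
        f"Title: {title}",
        f"Passage start: {first_sentence}",
    ])


entries = [
    "I always pack light for a mountain hike. It keeps the day fun.",
    "My weekend hike needed warm gear. The summit was cold.",
]
prompt = build_generation_input(
    "Packing for a mountain hike", "Every hike starts with the gear list.", entries
)
print(prompt)
```

In a real system both placeholders would presumably be replaced by model-based components; the sketch only shows which pieces reach the final generation stage.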

The Personalized Generation Task

To help the LLM replicate the user's writing style, the framework adopts a multitask approach. Intermediate tasks first teach the LLM to recognize the user's writing style so that it can then write in that style. The motivation comes from human learning, where writing proficiency has been observed to correlate with reading ability: the LLM is trained to read and understand the user's content before producing content based on it. For the personalized generation task itself, the system is given the instruction "Finish the passage in the user voice."
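
One plausible way to turn a user's past document into a training example for this task is to show the model the start of the passage and ask it to produce the rest. The sketch below assumes that split (first sentence as the start, remainder as the target) and a simple input/target record; neither the split nor the field names are confirmed by the article, and `make_generation_example` is a hypothetical helper.

```python
import re

GENERATION_INSTRUCTION = "Finish the passage in the user voice."


def make_generation_example(title, document, style_context):
    """Split a past document into a visible start and a target continuation."""
    sentences = re.split(r"(?<=[.!?])\s+", document.strip())
    start, rest = sentences[0], " ".join(sentences[1:])
    model_input = "\n".join([
        GENERATION_INSTRUCTION,
        f"Context from past writing: {style_context}",
        f"Title: {title}",
        f"Passage start: {start}",
    ])
    # The target is what the user actually wrote after the first sentence.
    return {"input": model_input, "target": rest}


example = make_generation_example(
    title="Packing for a mountain hike",
    document="Every hike starts with the gear list. Mine is short on purpose. "
             "A light pack keeps the day fun.",
    style_context="I always pack light for a mountain hike.",
)
```

Because the target is text the user actually wrote, each record rewards the model for continuing in that user's voice rather than in a generic one.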

Checking for User Voice Match

To ensure that the generated content matches the user's voice, a separate instruction is issued, asking the system to "Predict whether two passages are from the same author." This serves as a verification step to confirm that the content generated reflects the unique voice of the user.
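
The instruction above operates on pairs of passages. As a minimal sketch of how such pairs might be assembled, the code below builds a balanced set of same-author and different-author examples from a small collection. The record layout, the "yes"/"no" labels, the balancing, and the helper name `make_author_pairs` are all assumptions made for illustration.

```python
import itertools
import random

AUTHOR_INSTRUCTION = "Predict whether two passages are from the same author."


def make_author_pairs(passages_by_author, pairs_per_label=2, seed=0):
    """Build a balanced list of same-author ("yes") and different-author ("no") pairs."""
    rng = random.Random(seed)
    authors = sorted(passages_by_author)
    # Same-author pairs: every two-passage combination within one author's writing.
    positives = [
        pair
        for author in authors
        for pair in itertools.combinations(passages_by_author[author], 2)
    ]
    # Different-author pairs: one passage from each of two distinct authors.
    negatives = [
        (a, b)
        for x, y in itertools.combinations(authors, 2)
        for a in passages_by_author[x]
        for b in passages_by_author[y]
    ]
    rng.shuffle(positives)
    rng.shuffle(negatives)
    n = min(pairs_per_label, len(positives), len(negatives))
    labeled = [(p, "yes") for p in positives[:n]] + [(p, "no") for p in negatives[:n]]
    return [
        {"instruction": AUTHOR_INSTRUCTION, "passage_a": a, "passage_b": b, "label": label}
        for (a, b), label in labeled
    ]


passages_by_author = {
    "alice": ["I pack light, always.", "Short lists, short hikes.", "Gear is a burden."],
    "bob": ["The espresso machine arrived on Tuesday.", "Crema matters more than you think."],
}
pairs = make_author_pairs(passages_by_author)
```

Keeping the two labels balanced avoids a trivial solution where the model always answers "no" simply because different-author pairs are far more numerous.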

Data and Experimentation

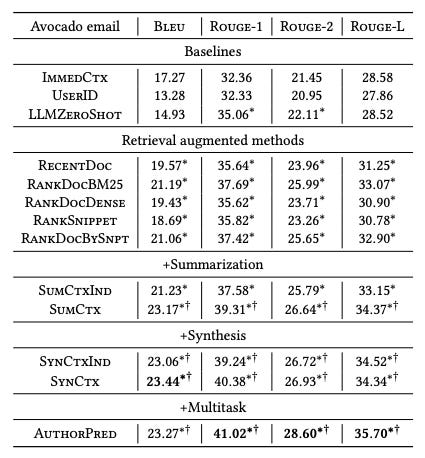

The proposed approach was evaluated on three datasets covering personal email communications, social media discussions, and product reviews: the Avocado Research Email Collection, the Reddit comments dataset, and Amazon review data, respectively. On all three datasets, the approach showed statistically significant improvement over previous methods.

The original research paper can be found here.

Conclusion

The multistage and multitask framework for teaching LLMs to personalize text generation is a notable advance in personalized content creation. By generating customized responses from documents the user has previously written, it points toward generative systems that can cater to specific audiences, creation contexts, and information needs. As research in this area continues, further improvements in personalization can be expected.
